Due to odd JAX issues

Anthropic published a blog post detailing three recent issues that affected a significant portion of their API users. This technical postmortem provides rare insight into the challenges of running large-scale AI services, and I appreciate their transparency. However, the details raise serious concerns about Anthropic’s engineering rigor.

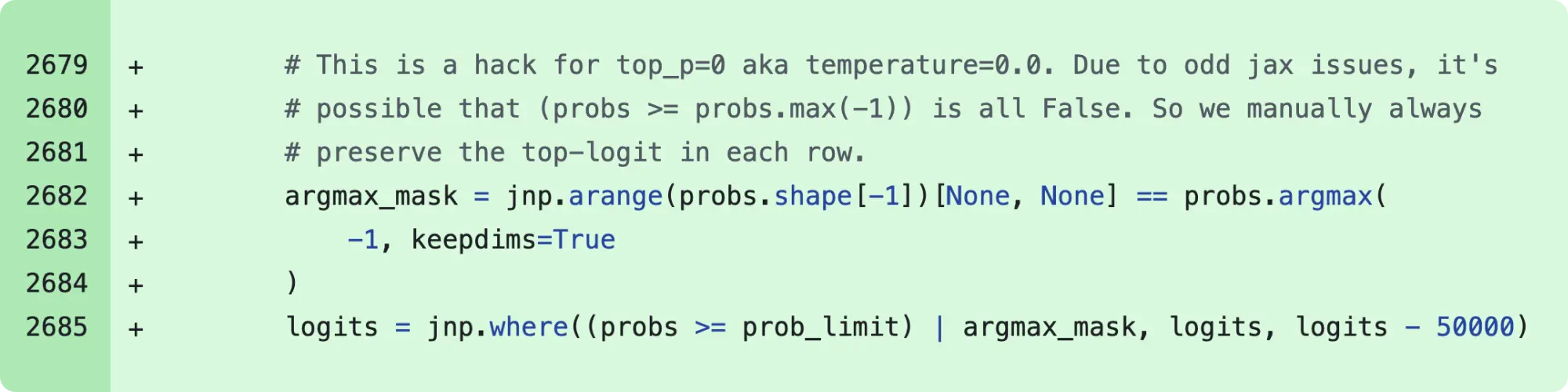

Consider this code snippet from their postmortem:

What a crude way to patch a third-party library bug!

Eight months later, the team deployed a rewrite to address the root cause behind this patch. Unfortunately, the rewrite exposed a deeper bug that the temporary fix had been masking all along.

This cascade of failures reveals both inadequate testing infrastructure and insufficient post-deployment monitoring. They mention implementing more sensitive evaluations and expanding quality checks, but this reads like a car manufacturer promising to watch for accidents more carefully.

I’ve been a paying 20x Max member since the program launched. Despite the postmortem’s openness, I’m not convinced these issues have been resolved. In fact, I received several disappointing responses from Claude Code today that made me question my subscription.

Starting tomorrow, I’m switching to GPT-5-Codex as my main coding assistant, based on strong recommendations from engineers I trust. Time to see if the grass really is greener on the other side.